My biggest fear when it comes to the current architecture of my HomeLab is that the computer I use as a server acts as a single point of failure.

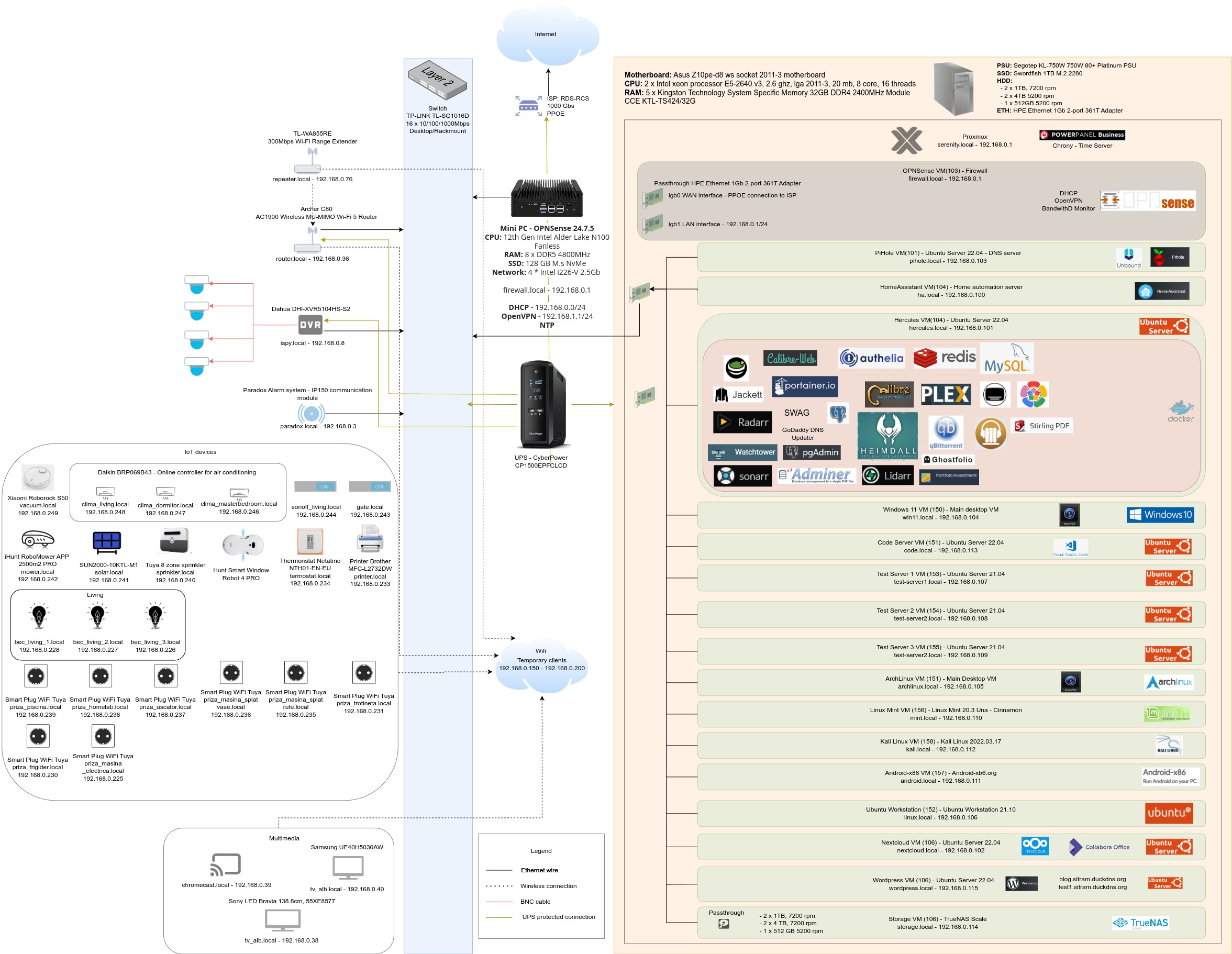

According to Wikipedia, a single point of failure (SPOF) is a part of a system that, if it fails, stops the entire system from working. If I consider my whole HomeLab as a system that provides various functionalities to my house, the main server is the subsystem that acts as the SPOF. Other subsystems, like the security cameras, the wireless routers, the security alarm and various IoT devices, are independent: if one of them fails, I only lose access to a certain functionality. The main server, however, binds everything together, supports additional subsystems and acts as the gateway to the Internet. This is why I consider it a single point of failure.
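The SPOF reasoning above can be sketched as a small dependency model: each component lists what it needs in order to work, and a failure propagates to everything that depends on the failed component, directly or transitively. The component names and dependencies below are a simplified, hypothetical model based on the description in this post, not an exact inventory of the setup.

```python
# Hypothetical, simplified dependency map of the HomeLab.
# Each key lists the components it needs in order to work.
DEPENDS_ON = {
    "internet": {"pfsense"},
    "pfsense": {"main-server"},
    "truenas": {"main-server"},
    "backups": {"truenas"},
    "main-server": set(),
    "cameras": set(),
    "wifi": set(),
    "alarm": set(),
}


def needs(component, target, graph):
    """Return True if component depends on target, directly or transitively."""
    stack, seen = [component], set()
    while stack:
        current = stack.pop()
        for dep in graph[current]:
            if dep == target:
                return True
            if dep not in seen:
                seen.add(dep)
                stack.append(dep)
    return False


def impact(target, graph):
    """List everything that stops working when target fails, including itself."""
    return sorted({target} | {c for c in graph if needs(c, target, graph)})


if __name__ == "__main__":
    # Print the blast radius of every single component failure.
    for component in DEPENDS_ON:
        print(f"{component} fails -> lost: {impact(component, DEPENDS_ON)}")
```

In this model a camera failure only takes down the cameras, while a main server failure also takes down pfSense, TrueNAS, Internet access and the backups, which is exactly why the main server stands out as the SPOF.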

Of everything hosted on the main server, the most critical piece is the VM that runs pfSense and acts as the DHCP server and firewall for my local network. If it fails, the entire house is left without Internet access. As a backup I can use the mobile data plan on my phone, but the connection in the area where I live is very bad.

Recently I experienced my first panic caused by the main server being offline. There was a power outage that lasted almost 40 minutes, a bit longer than my UPS can handle. After about 30 minutes, the UPS successfully shut down the server. It was a relief to see that I had configured this feature correctly, because in the almost one year since I added the UPS, I had never tested it.

When the power came back, I started the server and, after 10 minutes, I was surprised that I still couldn't access its web interface. Usually the Proxmox interface is reachable a few minutes after a reboot. I went to the closet where the server is physically located and, to my horror, saw that the boot process was stuck. I tried booting an older kernel version, in case the latest one had a bug, but got the same behavior. Next, I disabled quiet mode so I could see the boot messages and start troubleshooting. The service that sets up the network turned out to be the culprit: it looked like it was waiting for something, and because the service had no timeout, it stayed in that state forever.

I started searching online for similar symptoms and, after about an hour of research, I came across an entry in the Proxmox wiki. It said that in Proxmox 8 the network setup service could get stuck if ntp and ntpdate were used. This matched my symptoms, and I knew that one of the services I was running was ntp, which provides synchronized time to all the clients on my local network. I quickly booted into recovery mode, uninstalled ntp and ntpdate and rebooted the server. This time the boot process completed successfully and everything came back online. You cannot imagine my relief when I realized the issue was solved.

Even though the fix turned out to be simple and the downtime lasted only a few hours, I was scared. It made me think about the robustness of my HomeLab architecture and what improvements I could make. As I said above, my server binds a lot of subsystems together and offers additional services to my house. If it goes offline, I'm left in the dark. Of everything that runs on the server, which services are critical?

After a lot of thinking, I came up with two candidates:

- pfSense

- TrueNAS

The pfSense VM acts as the firewall and DHCP server for my entire house. It maintains the PPPoE connection to my ISP, which provides Internet access. The TrueNAS VM acts as a NAS server: it manages the backups of all my VMs, as well as various documents and media content.

Both of these run as virtual machines on the main server. When the server goes offline, I lose access to the Internet, my data and my backups. So what can I do to improve this situation?

The first option is to move both of these VMs to dedicated hardware. If the main server goes offline, at least the critical services provided by these two would remain available. For pfSense, a micro PC should be enough, as long as it has two Gigabit Ethernet ports. For TrueNAS, there are a lot of specialized NAS computers that can hold my 5 HDDs. My HomeLab would grow from one PC to three. The downsides of this solution are a higher upfront cost for new equipment, higher energy consumption and higher maintenance costs in case of hardware failure. The reason I bought the main PC in the first place was to run everything on a single machine and reduce energy consumption and maintenance costs. If I start migrating everything to separate hardware, what's the point of having the main server?

A second option is to migrate only one of the virtual machines to dedicated hardware. Of the two VMs, I would probably choose pfSense, because constant Internet access is more important than access to my data and backups. The downsides of this solution are the upfront cost of new hardware, higher energy consumption and no easy access to my backups if the main server fails.

A third option is to keep everything as it is. The downside of this solution is that if my main server has a hardware failure, I won't have Internet access or access to my backups. Without Internet access, debugging the failure becomes very difficult. I could use the data plan on my mobile phone, but it's very slow.
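The availability side of the three options can be summarized with a small Python sketch that records which machine each critical VM would run on and checks what survives when the main server goes offline. The host names (`micro-pc`, `nas-box`, `main-server`) are hypothetical labels, and the model deliberately ignores cost and energy consumption; it only captures which services survive and how many machines are needed.

```python
# Hypothetical placement of the two critical VMs under each option.
# The main server always stays, because it keeps hosting the other VMs.
OPTIONS = {
    "option 1: pfSense and TrueNAS on dedicated hardware": {
        "pfSense": "micro-pc",
        "TrueNAS": "nas-box",
    },
    "option 2: only pfSense on dedicated hardware": {
        "pfSense": "micro-pc",
        "TrueNAS": "main-server",
    },
    "option 3: keep everything as it is": {
        "pfSense": "main-server",
        "TrueNAS": "main-server",
    },
}


def surviving_services(placement, failed_host):
    """Return the critical services still running when failed_host goes offline."""
    return sorted(service for service, host in placement.items() if host != failed_host)


def host_count(placement):
    """Count the machines needed, always including the main server."""
    return len(set(placement.values()) | {"main-server"})


if __name__ == "__main__":
    for name, placement in OPTIONS.items():
        alive = surviving_services(placement, "main-server")
        print(f"{name}: {host_count(placement)} machine(s), "
              f"survives a main server failure: {alive or 'nothing'}")
```

Each step from option 3 to option 1 adds one machine and keeps one more critical service alive during a main server failure, which is the trade-off against cost and energy consumption described above.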

It turns out that increasing the robustness of my HomeLab architecture is not an easy task. Stay tuned to see which option I choose.